How LLMs like GPT "understand" text

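I'm almost certain you have used an LLM (large language model) before. Maybe GPT, LLaMA, Gemini, or Claude. But do you really know how these models "understand" the words and sentences you type? If not, here is a brief summary: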

I'll use the sentence "Hello, my name is David."

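- The model's handling of this sentence starts with tokenization, which converts your text into a numeric format the computer can process. In this example, the tokenizer output for the sentence includes:

- token_type_ids: ([[0, 0, 0, 0, 0, 0, 0, 0, 0]])

- attention_mask: tensor([1, 1, 1, 1, 1, 1, 1, 1, 1])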

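A token ID works like your ID card number. By itself it tells nothing about you; it only serves as an identifier. In the same way, the model uses these tokens to identify each word (or word piece) in the sentence.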
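To make the tokenization step concrete, here is a minimal sketch in plain Python. It is not the tokenizer that bert-base-multilingual-uncased actually uses: the vocabulary `VOCAB` and the helpers `tokenize` and `encode` are hypothetical, and the ID values are made up. It only illustrates the idea of mapping pieces of text to identifiers. BERT-style tokenizers also add the special markers `[CLS]` and `[SEP]` around the sentence, which is presumably why the lists above have nine entries.

```python
import re

# Hypothetical toy vocabulary. A real tokenizer ships with tens of
# thousands of entries, and its ID values differ from these.
VOCAB = {
    "[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3,
    "hello": 4, ",": 5, "my": 6, "name": 7, "is": 8, "david": 9, ".": 10,
}


def tokenize(text):
    """Lowercase the text, split it into words and punctuation marks,
    and wrap the result in the [CLS] / [SEP] markers."""
    pieces = re.findall(r"\w+|[^\w\s]", text.lower())
    return ["[CLS]"] + pieces + ["[SEP]"]


def encode(text):
    """Turn text into the three lists a BERT-style model expects."""
    tokens = tokenize(text)
    # Unknown pieces fall back to the [UNK] identifier.
    input_ids = [VOCAB.get(token, VOCAB["[UNK]"]) for token in tokens]
    return {
        "tokens": tokens,
        "input_ids": input_ids,
        # All zeros: every token belongs to the same (single) sentence.
        "token_type_ids": [0] * len(input_ids),
        # All ones: every position is a real token, none is padding.
        "attention_mask": [1] * len(input_ids),
    }


encoded = encode("Hello, my name is David.")
print(encoded["tokens"])
print(encoded["input_ids"])
print(encoded["token_type_ids"])
print(encoded["attention_mask"])
```

In this sketch `token_type_ids` only matters when two sentences are fed in together, and `attention_mask` only matters when shorter sentences are padded to a common length, so for a single sentence they are all zeros and all ones respectively.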

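- Now these tokens are fed into the model as input, and the model produces embeddings. An embedding is the model's actual representation of the sentence's meaning, shaped by what it learned during pretraining.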

Here is the actual embedding of the phrase "Hello my name is David", produced with an LLM called bert-base-multilingual-uncased.

"Hello my name is David" = ([-6.3067e-02, -6.5003e-02, 5.8351e-02, 1.5615e-02, -2.8820e-01,

1.1979e-01, -1.0806e-01, 5.2061e-02, -1.9341e+00, -2.3762e-02,

-6.2240e-02, -1.7020e-01, 7.8117e-02, 2.5818e-03, 1.7843e-01,

1.2076e-01, 9.7030e-02, -5.6334e-02, -1.6212e-02, 9.8563e-02,

-3.8563e-02, 2.1909e-01, -8.8794e-02, -1.0928e-01, 6.9245e-01,

-1.3860e-01, 9.4015e-02, -6.3359e-03, -2.0649e+00, 3.1342e-02,

-3.4443e-01, -4.3578e-02, -6.4098e-02, 1.3312e-01, 6.4332e-02,

-1.2022e-01, 1.4226e-01, 1.7373e+00, -4.9053e-02, -2.6366e-02,

-6.0595e-02, -3.4298e-02, -5.9610e-02, 9.3733e-02, -1.1392e-01,

-7.4029e-02, 6.6102e-03, 4.6267e-02, -1.1696e-01, -2.2648e-02,

-7.6536e-02, 1.1436e-01, -9.9977e-02, 4.7857e-02, -6.1316e-02,

-7.9082e-02, 1.1920e-03, 1.5700e-02, -5.1274e-02, 7.5583e-02,

1.9443e+00, 2.2157e-02, -6.8364e-02, -5.4251e-02, 1.3364e-01,

6.3926e-02, -1.7416e-01, -3.1813e-02, -4.7481e-02, -3.9059e-02,

7.4262e-02, 1.2101e-01, 7.3585e-02, -9.8143e-02, -9.7638e-02,

-1.8205e-01, 4.3332e-01, 6.4791e-02, 9.0205e-02, -7.1362e-02,

6.8390e-02, 3.2625e-02, -1.3394e-01, 4.8559e-02, -3.2671e-01,

3.4458e-02, 1.0503e-02, -9.2556e-03, -7.2692e-02, -1.5348e-01,

3.8256e-01, -2.4210e-03, -6.4833e-02, 1.9365e-01, 4.8983e-02,

-1.2900e-03, -2.2435e-01, 1.7160e+00, -9.9915e-02, 1.8914e-01,

-2.7106e-02, 1.2817e-01, -2.2345e-02, 8.2129e-02, 3.2404e-02,

5.9931e-02, 3.1020e-03, 1.2666e-01, -1.7630e-03, -1.1525e-02,

6.5068e-01, -2.0728e-01, 2.1018e-01, -1.8153e-01, -7.4626e-02,

-3.3399e-02, -1.2778e-01, -1.2191e-01, -4.5719e-02, 6.2992e-03,

5.8922e-02, 1.5226e+00, -9.6009e-02, -3.1425e-01, -2.4100e-01,

-4.8002e-03, -8.3903e-02, 5.3542e-02, -4.3094e-02, 3.2876e-02,

1.9106e-02, -4.7906e-02, 1.0480e+00, -2.9698e-01, 1.4678e-01,

1.6903e-01, -1.2754e-01, -1.2511e-03, 1.1102e-01, -3.8414e-02,

2.6777e-02, -1.9369e-01, 1.1017e-01, -8.3976e-02, -6.1403e-02,

-1.1468e-01, 2.3421e-01, 4.5592e-02, -3.7761e-02, -3.5982e-02,

6.4221e-02, 1.5828e-01, -4.0606e-02, -6.9611e-03, -1.1011e-01,

3.2153e-02, 2.2642e-02, -4.6714e-01, -2.3431e-02, -3.0966e+00,

-3.7265e-02, 5.1370e-02, 7.1625e-02, 6.7444e-02, 1.2767e+00,

-2.7577e-03, -8.8830e-02, 3.2891e-02, -1.1851e-01, 2.9493e-02,

-2.4684e-02, 3.0232e-03, -1.1200e-02, -5.4550e-02, -2.4983e-01,

1.2650e-01, -9.7227e-02, -6.5607e-02, 2.0831e-03, 3.8514e-02,

-1.2599e-01, 2.0247e-01, -1.7432e-01, -2.7520e-03, -8.8222e-02,

4.5206e-02, -1.7182e-02, -2.1485e-01, -3.5201e-02, -1.4416e-01,

1.1249e-01, -7.0429e-02, 8.2445e-02, 1.5059e-02, -8.6289e-02,

-1.5666e-01, 3.6360e-02, 1.3187e-01, -9.6132e-02, 5.3311e-02,

-5.3906e-03, 2.4517e-02, 3.7261e-02, 1.5909e-01, -1.0021e-02,

-3.5074e-02, -3.9212e-02, -1.8540e-02, 4.3584e-03, -4.1472e-02,

2.7728e-02, -6.1495e-02, -1.9105e-01, -1.9360e-02, -1.0231e-01,

-7.3099e-02, -1.6689e-01, 8.3387e-02, -1.1941e-04, 1.8164e-01,

-3.5713e-02, -2.1215e-02, -2.4135e-02, 5.7111e-02, -1.1214e-01,

-1.8545e-03, -9.4029e-02, 4.4611e-02, -1.2727e-01, -9.5095e-02,

-1.0796e-01, -8.2994e-02, -1.7836e-01, -3.0736e-02, 1.2586e-01,

-3.3929e-01, -9.4927e-02, 3.0953e-02, 6.4855e-02, 7.4711e-04,

6.4278e-02, -1.1293e-01, 6.6059e-02, -3.6809e-03, 1.5743e+00,

9.7339e-02, -1.0696e-03, -1.4953e-01, 1.6769e-01, -3.8103e-02,

1.3830e-02, -6.1597e-02, -1.6302e+00, 7.2874e-02, -1.0372e-01,

-1.2309e-01, 1.4384e-01, 4.8703e-02, 4.1658e-02, 6.0290e-02,

-1.5625e+00, -8.3253e-02, 6.6407e-02, -6.5508e-02, -6.8948e-02,

-2.2298e-01, 6.7682e-02, -1.9633e-02, -5.8423e-02, -1.7092e-01,

6.2720e-02, 7.3877e-02, 5.2816e-02, 3.3742e-02, 9.7525e-02,

-1.3811e-01, 5.8613e-02, 1.5460e-01, -8.0117e-02, -8.0988e-02,

-1.3124e-01, -1.9548e-01, -1.2079e-01, -1.6331e-01, 1.7407e-02,

-8.8708e-02, 2.9301e-02, 2.5266e-01, 1.0793e-01, 4.5512e-02,

-3.0187e+00, -3.7133e-03, 2.8234e-01, 4.2589e-02, 5.5785e-02,

9.9098e-03, -1.8502e-01, 1.8162e-01, -6.8798e-02, -5.8463e-02,

-5.9285e-02, -1.2853e-01, 6.9022e-02, 1.1898e-01, 4.4205e-03,

-2.3582e-01, 6.9759e-02, 1.3476e-01, -4.9181e-01, 3.4814e-02,

-1.5499e+00, -6.5551e-02, -7.0452e-02, -1.4871e-02, -1.7225e-01,

-1.5752e-01, -2.9805e-02, -7.9483e-02, -7.0499e-02, -7.6339e-02,

7.6202e-02, 5.1031e-02, -1.2585e-02, -1.1090e-01, -7.3490e-02,

1.1477e-02, -4.7813e-02, 1.1502e-01, 9.0828e-02, -1.2063e-01,

-7.5006e-02, 1.3771e-01, -4.2939e-02, -4.1942e-02, 5.2841e-02,

-3.4773e-02, 2.1186e-02, 2.4575e-03, 1.1969e-02, 8.5341e-02,

-4.7244e-02, -8.5974e-02, 1.7498e-02, -2.5833e-02, 1.5605e-01,

-2.1798e-01, 1.2778e-02, -8.0727e-02, -1.1978e-01, -5.3195e-02,

-2.3644e-01, -7.6019e-02, -1.5959e-02, -1.4808e-01, 1.0978e-01,

-1.1879e-01, 6.6888e-02, 5.0088e-03, 7.4238e-02, -5.2953e-02,

-9.0626e-02, 4.2202e-02, -8.4847e-02, 5.1210e-02, 1.7135e-02,

-2.1636e+00, 1.1707e-01, 1.4536e-01, -4.3533e-02, -4.0685e-02,

1.5861e-01, -5.0607e-02, 9.7592e-01, 7.3470e-02, -4.0372e-02,

-2.1572e-02, -2.1161e-02, 2.1003e-01, -3.8847e-01, 4.2965e-03,

7.3182e-02, -3.2723e-04, -2.6543e-02, -1.1692e-01, -1.1041e-02,

-6.3984e-02, 8.0302e-02, 6.4713e-02, -5.9662e-02, 1.4272e-02,

-1.5337e-02, 1.0357e-01, -1.2674e-02, -2.9119e-01, 3.1614e-02,

-6.7065e-01, 2.9093e-02, -4.8170e-03, -2.5485e-01, 7.1802e-01,

-1.2004e-01, 3.4008e-02, -3.7612e-02, 1.5460e+00, 6.3667e-02,

2.4566e-02, 1.9012e-01, 4.2099e-01, -8.3971e-02, -6.7866e-01,

-1.8864e+00, 5.6499e-02, 1.7758e-01, -2.8147e+00, 5.9844e-02,

-9.5785e-02, -2.8091e-03, -8.3825e-02, -3.1631e-01, 1.0209e-01,

1.2545e-02, -1.7500e+00, -2.4430e-02, -8.6223e-02, 1.2687e-01,

-1.3505e-01, 2.7337e-02, 2.0612e-01, 1.3671e+00, 1.3369e-01,

1.7137e-01, -8.7122e-02, -1.4447e-02, -9.9240e-02, -1.0286e-02,

-1.1533e-01, 1.4692e-01, 1.7028e-01, 5.8127e-04, -9.9905e-02,

4.1405e-02, 2.5269e-02, -4.7969e-02, 1.3755e-03, -1.1023e-01,

8.1470e-02, 8.5869e-03, 2.5242e+00, 3.6176e-02, 1.0574e-01,

-6.8174e-02, 1.0276e-01, 1.2210e-01, 1.5842e-01, 5.7113e-02,

1.1842e-02, 4.9331e-02, 1.7497e-02, 5.8974e-02, 2.0468e+00,

-5.5540e-02, 2.9182e-02, 5.8791e-03, 2.6187e-01, -1.3443e-01,

-4.9500e-02, -3.1041e-02, -1.6273e-02, 2.2521e-01, -2.2524e-01,

1.9471e-01, 5.4658e-01, -5.1764e-02, -2.8641e-02, 8.6076e-02,

-3.0763e-02, 5.0747e-02, -6.3990e-02, -2.3135e-01, -8.3855e-02,

7.0134e-03, -1.8400e-02, 2.3643e-02, 1.0144e-02, 1.1015e-01,

-4.2524e-02, -9.7753e-03, 1.9581e-01, 3.5429e-02, 2.8661e-02,

-7.8723e-02, -4.4352e-02, -4.2486e-03, 5.5825e-02, -1.3521e+00,

-1.4316e-01, -5.5181e-03, -9.0850e-02, 1.5423e-01, 1.5931e+00,

1.8909e-02, -5.3177e-02, 6.4451e-02, 1.1712e-01, -1.1272e-02,

-1.5884e-01, 1.6033e+00, -1.3048e-01, -1.6845e-01, -1.0158e-01,

1.3358e-01, 8.4550e-02, -7.1393e-02, 1.3750e-01, -4.5543e-02,

-4.2227e-02, 9.4443e-02, 9.5331e-02, 4.7788e-02, 5.6615e-03,

9.5981e-02, -1.4945e-01, 6.1859e-02, -9.8724e-02, 2.0646e-01,

4.0786e-02, -3.4560e-02, 8.6122e-02, 1.2271e-02, 1.5503e+00,

3.5104e-02, 3.8816e-02, 1.6699e-01, -1.5367e-01, 9.9586e-02,

8.5749e-02, 1.0553e-01, -1.1082e-01, 1.2513e-01, -3.1657e-02,

-7.6720e-02, -1.6409e-01, -4.7703e-02, 1.2519e+00, -5.2303e-02,

1.2182e-01, -3.8448e-01, 4.9344e-01, 3.6140e-02, -1.9562e-02,

-2.2346e-01, -8.2244e-02, 1.2129e-01, 1.3974e-02, -3.1754e-02,

4.5216e-01, -6.2957e-02, 7.4347e-02, -1.0129e-01, -1.3847e-01,

-2.1854e-02, 2.1551e-02, -2.8596e-02, -2.4954e+00, -9.0582e-02,

9.2781e-02, 2.8728e-02, 4.2555e-03, 1.7817e-02, 4.7565e-02,

2.9899e-01, 1.4331e-01, -6.1007e-02, -1.1640e-02, 1.2662e-01,

9.2517e-02, 4.1011e-02, 4.5432e-03, -2.2670e-01, 1.0026e-01,

-3.7663e-02, 1.1720e-01, 7.5437e-02, 1.5296e-01, 1.5637e-01,

6.5562e-02, 6.4253e-02, -1.9596e-03, 1.7449e-02, -1.8204e-01,

-5.8701e-02, -1.5018e-02, 1.6898e-01, -3.2648e-02, -1.2541e-02,

-4.6273e-02, -3.0516e-02, -9.3489e-02, 1.1024e-01, 4.9948e-01,

-1.1470e+00, 7.2239e-02, 1.0472e-01, 1.2440e-01, 1.4803e+00,

-3.7044e-03, 1.0590e-01, -1.7400e-01, -1.7261e-01, 1.4460e-01,

-7.4149e-02, 1.4764e-02, -1.0580e-01, 1.8227e-01, 1.0410e-01,

-6.6081e-02, 1.0029e-02, 1.5268e-01, 7.9691e-02, -1.0936e-01,

1.6692e-01, -9.1661e-02, -1.0928e-01, -1.0173e-01, 5.6247e-02,

1.2121e-01, -1.3991e-01, 7.9703e-02, -5.5685e-02, 7.2104e-02,

-1.6365e+00, -9.2635e-02, -6.0941e-03, -4.3209e-02, 9.3835e-02,

-1.7847e-01, 1.2173e+00, 9.1381e-02, -6.2800e-02, -9.5107e-02,

1.3349e-01, 1.9345e+00, -1.0302e-01, 3.1774e-02, -3.7533e-02,

-2.9592e-01, 8.2011e-02, 1.5798e-01, -1.9119e-01, 1.2555e-01,

-2.1048e+00, -2.7687e-02, -6.2751e-02, -1.6222e-03, -1.6513e+00,

-7.7844e-02, -1.1261e-01, -5.4261e-02, -5.6410e-02, 9.1984e-02,

-2.6309e-02, 3.3499e-01, -2.0995e-01, -1.1120e-01, -6.6236e-02,

-5.6154e-02, 9.7592e-03, -7.8325e-02, 1.3913e+00, -2.2296e+00,

-1.3679e-02, -1.4830e-01, 1.6990e-02, 1.3857e-01, 2.0402e-02,

3.3725e-01, 1.7047e+00, 6.7117e-02, 1.1202e-01, -1.2594e-01,

1.0127e-01, 2.7514e-02, 1.0928e-02, -1.1245e-01, -4.2177e-02,

1.0642e-01, -5.2709e-02, -5.7320e-02, 5.9015e-02, 2.2151e-01,

-4.1481e-02, -1.5869e-01, 1.9182e-02, -1.7970e+00, -2.0666e-02,

7.0250e-02, -7.4218e-03, -1.0282e-01, 2.0588e+00, -1.0758e-01,

2.0317e-01, 6.6094e-02, 5.2337e-02, -3.2627e-02, -4.1721e-02,

-5.9252e-02, -9.7024e-03, 1.4159e-01, -2.2132e-01, -5.5605e-01,

1.6207e-01, -5.5934e-02, 5.0485e-02, 9.1186e-02, -4.9125e-02,

-1.0306e-01, -1.6561e-01, 1.4910e-01, 1.9850e-01, -1.4786e-02,

-6.6644e-02, -1.3049e-01, -1.2594e-02, 7.8968e-02, -7.2599e-02,

-1.2121e-02, 1.2976e-02, 2.0674e-01, -1.6264e+00, 1.0526e-01,

1.8668e-01, 1.5671e+00, 1.1426e-01, -1.8295e-01, 1.4920e+00,

9.8368e-02, 1.5032e-01, 3.1676e-02, 7.2132e-02, -3.8246e-02,

1.6280e-02, -1.3402e-01, -1.7881e+00, -2.2577e+00, -8.5013e-02,

4.4849e-02, 9.2129e-02, 4.8456e-02, -1.3977e-01, 4.1408e-02,

1.5334e-01, -7.1907e-02, 4.0161e-02, 3.0400e-02, -1.2430e-01,

3.8519e-02, 2.1779e-02, 1.1877e+00, 1.2563e-01, 2.0834e+00,

-1.7978e-01, -1.2695e-01, 9.9676e-02, -1.1639e-01, -1.4845e-02,

-2.9157e-02, -6.5303e-02, 1.8666e-02])

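This tensor holds 768 values, which is the hidden size of this BERT model. It captures contextual information about the words: not only which words appear, but also how each one relates to the other words in the sentence, as learned during the model's pretraining.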
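The step from token IDs to a single vector can be sketched in a few lines of plain Python. This is a deliberately simplified toy and not how BERT computes its output: the table is filled with random numbers instead of learned weights, the names `make_embedding_table` and `embed_sentence` and the ID values are hypothetical, and the sentence vector is just the average of the token vectors. A real model passes the token vectors through many attention layers first, which is where the contextual information comes from.

```python
import random

EMBEDDING_SIZE = 4  # toy size; a BERT base model uses 768


def make_embedding_table(vocab_size, dim, seed=0):
    """Build a lookup table with one vector per token ID.
    Random values stand in for the weights learned in pretraining."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)]
            for _ in range(vocab_size)]


def embed_sentence(input_ids, table):
    """Look up each token's vector and average them into one vector."""
    vectors = [table[token_id] for token_id in input_ids]
    dim = len(vectors[0])
    return [sum(vector[d] for vector in vectors) / len(vectors)
            for d in range(dim)]


table = make_embedding_table(vocab_size=11, dim=EMBEDDING_SIZE)
input_ids = [2, 4, 5, 6, 7, 8, 9, 10, 3]  # hypothetical token IDs
sentence_vector = embed_sentence(input_ids, table)
print(len(sentence_vector))
print(sentence_vector)
```

Whatever the sentence length, the result has a fixed number of values (`EMBEDDING_SIZE`), which is the property that makes embeddings convenient to store and compare.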

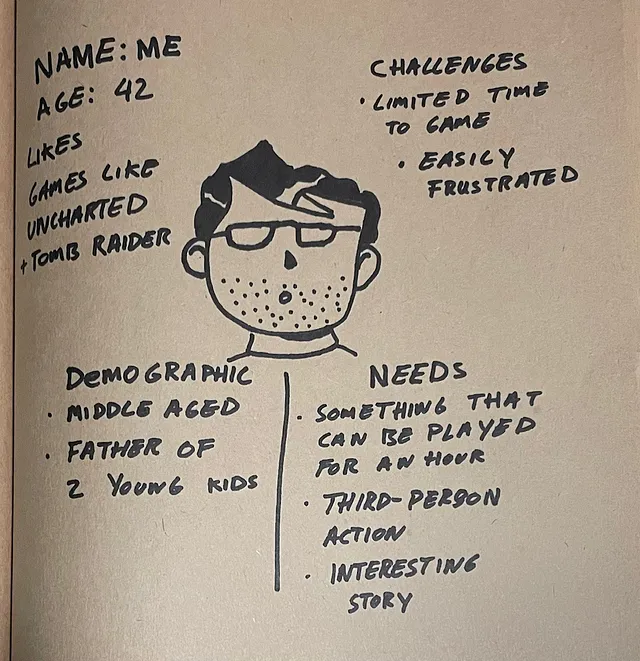

An analogy may help here:

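- Imagine you are applying for a job and you bring your ID card.

- The recruiter identifies you by the number on your ID card (tokenization), confirming that you are you and not someone else.

- Next, the recruiter starts building your embedding by giving you a pre-designed test of 100 questions (the equivalent of the pretrained model), each answered on a scale from 0 to 10. (For example, one question might be: "How much do you like teamwork? 0 means 'I hate it', 10 means 'I can't work alone!'")

- Once the test is done, the recruiter has a precise profile of your traits in the following format: [1, 3, 6, 2, 7, 4, 5, 9, 2, 4, 3, 8, 10, 8, 6, 3, 4, 3, 1, 8, 5, 0, 5, 2, 3, 0, 5, 1, 2, 2, 3, 5, 7, 8, 9, 0, 8, 6, 4, 4, 6, 8, 0, …, 5]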

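With this information, the recruiter moves on to the next stage of the hiring process with a fairly precise idea of what kind of person you are. If they notice that your initial profile needs adjusting, though, they may revise these numbers at each stage.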

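This is a simplified but reasonably accurate description of how LLMs work. Of course, models differ in some details (embedding size, tokenization method, and so on), but the overall process is similar.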

I hope this helps. Bye!