Building Multimodal AI in Healthcare with GPT and Qdrant

The code for this blog/project is available here: https://github.com/iasarthak/healthcare_multimodal_ai

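Artificial intelligence is changing everything, fast. New tools and APIs ship almost daily, and keeping up is no small task. Fortunately, plenty of excellent wrappers (not rappers!) simplify the process and make it easier than ever to build AI tools for almost any use case.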

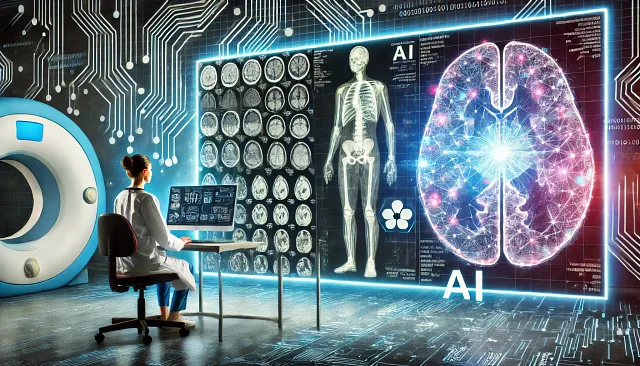

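Recently, Abhishek and I tried a project to do exactly that. Trust me, it is easier than you think! Our goal was to help radiologists by building a tool that not only analyzes medical reports and images but also suggests possible next steps, so they can give their patients the best care.

In technical terms, this is a RAG (Retrieval-Augmented Generation) system. For us, though, it is more of a "RAG to riches" story, where "riches" means productivity. And who would not want that, especially when doctors already work long hours? This project aims to give them an extra hand.

So, let's dive into how we built it!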

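So, what is multimodal AI, and how did we implement it?

Multimodal AI integrates different types of data (such as text, images, and audio) into a single model that can process complex information and mimic the way humans perceive the world. This lets an AI system draw on several sources of information to understand a subject more fully. In healthcare, for example, a multimodal model can analyze medical images together with textual patient records to provide more accurate diagnostic insights.

The future of AI is multimodal because it matches how humans interpret and process information through multiple senses. This capability enables more robust, context-aware models that adapt to different applications, including healthcare, autonomous driving, and content generation.

In this project, we show how to build a multimodal AI system that uses GPT-4o for natural language understanding and Qdrant to manage and query vector embeddings. The system combines text and image data to provide a diagnostic aid for healthcare applications.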

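Prerequisites

Before you start, make sure you have the following:
- Python 3.8+ installed.
- API keys for GPT-4o and Qdrant.
- Dependencies such as fastembed, qdrant-client, openai, and gradio installed. (Don't worry, these are also listed in the requirements.txt file; the repository link is at the end.)
- Dataset: medical scans and the corresponding patient records in text format. (Explained below.)
- Patience (the most important one, you know!)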

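Dataset

For this project, we use the Radiology Objects in COntext (ROCO) dataset, a large-scale medical imaging dataset with thousands of anonymized medical scans and their corresponding captions.

ROCO was designed specifically for the radiology domain, which makes it a good fit for developing multimodal models that analyze medical images together with the associated text.

In the repository, I have prepared a subset of the ROCO dataset in the data folder, containing a variety of medical scans and their text descriptions. The sample includes images representing different medical conditions and is enough to demonstrate what the project can do.

You can access the full dataset here. After cloning the repository, you can run a script that downloads the dataset to your local machine.

Make sure you review the dataset's license and access guidelines for research use.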
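To make the data layout concrete, here is a minimal sketch that parses caption lines of the form `image_id<TAB>caption` using only the standard library. The tab-separated layout is inferred from how `captions.txt` is read later in this post; the `parse_captions` helper and the two sample rows are made up for illustration and are not real ROCO data.

```python
import csv
import io


def parse_captions(text: str) -> dict:
    """Map image_id -> caption from tab-separated lines (one pair per line)."""
    reader = csv.reader(io.StringIO(text), delimiter="\t", quoting=csv.QUOTE_NONE)
    captions = {}
    for row in reader:
        if len(row) < 2:
            continue  # skip blank or malformed lines
        image_id, caption = row[0].strip(), row[1].strip()
        captions[image_id] = caption
    return captions


# Hypothetical sample rows, not real ROCO data
sample = (
    "ROCO_00001\tChest X-ray showing a right lower lobe opacity.\n"
    "ROCO_00002\tAxial CT of the abdomen.\n"
)
captions = parse_captions(sample)
print(captions["ROCO_00001"])
```

Keying captions by image ID makes it straightforward to pair each caption with the image file of the same name, which is what the ingestion code later in the post does with pandas.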

Architecture

Here is a fairly intuitive architecture that shows how our project is structured.

Steps to Implement Multimodal AI

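1. Sign up for GPT-4o and Qdrant:

- GPT-4o: Create an account on the OpenAI platform and obtain an API key to access GPT-4o's natural language processing capabilities. See this guide for getting an API key: https://www.merge.dev/blog/chatgpt-api-key
- Qdrant: Sign up on the Qdrant website to manage and query your vector embeddings efficiently. In this project we store our vector embeddings locally. Qdrant's Python wrapper is thorough enough to get this done in a few commands.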
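The GPT client later in this post reads the key from `Config.OPENAI_API_KEY`, but the `config` module itself is not shown. The sketch below is one plausible shape for it, assuming the key lives in an `OPENAI_API_KEY` environment variable; the `require_api_key` helper is hypothetical and only illustrates failing early when the key is missing.

```python
import os


class Config:
    """Hypothetical stand-in for the `config` module imported later in this post."""

    # Read the key from the environment instead of hard-coding it in source
    OPENAI_API_KEY = os.environ.get("OPENAI_API_KEY", "")


def require_api_key() -> str:
    """Return the key, or fail early with a readable message if it is missing."""
    if not Config.OPENAI_API_KEY:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")
    return Config.OPENAI_API_KEY
```

Keeping the key out of the source tree also keeps it out of version control, which matters once the repository is public.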

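2. Data preparation and embedding creation:

Text embeddings - To convert patient records into vector embeddings, we use the FastEmbed library. Each record is converted into a vector representation and stored with metadata such as patient ID, diagnosis, and symptoms. In this project, the records are the ROCO captions.

Image embeddings - To convert medical images into vector embeddings, we use the CLIP model. Each image is processed into a vector and stored along with metadata such as scan type and diagnosis.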
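Once text and images are vectors, "relevance" comes down to a similarity score between vectors; the collection created later in this post is configured with cosine distance. As a minimal sketch of that idea, the function below computes cosine similarity in plain Python. The 3-dimensional vectors are toy values standing in for real embeddings, and the variable names are illustrative only.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention for zero vectors in this sketch
    return dot / (norm_a * norm_b)


# Toy 3-dimensional vectors; real embeddings have hundreds of dimensions
caption_vec = [0.9, 0.1, 0.0]
similar_scan_vec = [0.8, 0.2, 0.1]
unrelated_scan_vec = [0.0, 0.1, 0.9]

print(cosine_similarity(caption_vec, similar_scan_vec))
print(cosine_similarity(caption_vec, unrelated_scan_vec))
```

The first score comes out much higher than the second, which is the property a vector database exploits when it ranks stored items against a query.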

File: src/embeddings_utils.py

from typing import List

from fastembed import TextEmbedding, ImageEmbedding

TEXT_MODEL_NAME = "Qdrant/clip-ViT-B-32-text"
IMAGE_MODEL_NAME = "Qdrant/clip-ViT-B-32-vision"


def convert_text_to_embeddings(documents: List[str], embedding_model: str = TEXT_MODEL_NAME) -> List:
    text_embedding_model = TextEmbedding(model_name=embedding_model)
    # embed() returns a generator of embeddings, so materialize it into a list
    text_embeddings = list(text_embedding_model.embed(documents))
    return text_embeddings


def convert_image_to_embeddings(images: List[str], embedding_model: str = IMAGE_MODEL_NAME) -> List:
    # images is a list of image file paths
    image_model = ImageEmbedding(model_name=embedding_model)
    images_embedded = list(image_model.embed(images))
    return images_embedded

3. Storing embeddings in Qdrant:

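We store the text and image embeddings in the Qdrant vector database. The metadata attached to each embedding helps us run precise similarity searches later.

The vectors named "text" and "image" refer to the embeddings for each input modality. Naming them lets us easily tell text data from image data during processing. This ensures each input is handled appropriately and that the two can be combined effectively in tasks such as retrieval or content generation, improving the accuracy and coherence of the system.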
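To illustrate what named vectors buy us, here is a toy in-memory stand-in, not the Qdrant API: each point carries one vector per modality under a name, and a search picks which named vector to compare against. The 2-dimensional vectors, captions, and the `search` helper are all made up for this sketch.

```python
import math
from typing import Dict, List, Tuple

# Each point stores one vector per modality under a name, plus a payload.
# Toy 2-dimensional vectors and captions, for illustration only.
points = [
    {"id": 1, "vector": {"text": [1.0, 0.0], "image": [0.0, 1.0]}, "payload": {"caption": "chest x-ray"}},
    {"id": 2, "vector": {"text": [0.0, 1.0], "image": [1.0, 0.0]}, "payload": {"caption": "brain mri"}},
]


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


def search(vector_name: str, query: List[float], limit: int = 3) -> List[Tuple[float, Dict]]:
    """Rank points by cosine similarity against the vector stored under vector_name."""
    scored = [(cosine(p["vector"][vector_name], query), p) for p in points]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:limit]


# The same query vector ranks points differently depending on the named vector
print(search("text", [1.0, 0.0])[0][1]["payload"]["caption"])   # chest x-ray
print(search("image", [1.0, 0.0])[0][1]["payload"]["caption"])  # brain mri
```

Because both vectors live on the same point, a hit found through either modality returns the same payload, so a text query can surface an image path and an image query can surface a caption.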

File: src/create_data_embeddings.py

import os
import uuid

import pandas as pd
from fastembed import TextEmbedding, ImageEmbedding
from qdrant_client import QdrantClient, models

from src.embeddings_utils import (
    convert_text_to_embeddings,
    convert_image_to_embeddings,
    TEXT_MODEL_NAME,
    IMAGE_MODEL_NAME,
)

DATA_PATH = '/Users/sarthak/Documents/Work/Personal_Projects/healthcare_multimodal_ai/data/'


def create_uuid_from_image_id(image_id):
    # Derive a stable UUID from the image ID to use as the Qdrant point ID
    NAMESPACE_UUID = uuid.UUID('12345678-1234-5678-1234-567812345678')
    return str(uuid.uuid5(NAMESPACE_UUID, image_id))


def create_embeddings(collection_name):
    # Read captions txt data (tab-separated: image_id, caption)
    path = DATA_PATH + 'captions.txt'
    caption_df = pd.read_csv(path, sep='\t', header=None, names=['image_id', 'caption'])

    # Read images
    image_directory = os.listdir(DATA_PATH + 'images')

    # Filter out images that are not in the captions
    images = []
    for image in image_directory:
        if image.split('.')[0] in caption_df['image_id'].values:
            images.append(image)

    # Create image_id, caption, image_path list of dictionaries
    image_docs = []
    for image in images:
        image_id = image.split('.')[0]
        caption = caption_df[caption_df['image_id'] == image_id]['caption'].values[0]
        image_path = DATA_PATH + 'images/' + image
        image_docs.append({'image_id': image_id, 'caption': caption, 'image_path': image_path})

    # Convert captions to embeddings with FastEmbed (CLIP text model)
    captions = [doc['caption'] for doc in image_docs]
    embeddings = convert_text_to_embeddings(captions)
    for idx, embedding in enumerate(embeddings):
        image_docs[idx]['caption_embedding'] = embedding

    # Convert images to embeddings with FastEmbed (CLIP vision model)
    image_embeddings = convert_image_to_embeddings([doc['image_path'] for doc in image_docs])
    for idx, embedding in enumerate(image_embeddings):
        image_docs[idx]['image_embedding'] = embedding

    # Save the embeddings to the vector database.
    # ":memory:" runs Qdrant in local in-memory mode, so data is lost when the process exits.
    client = QdrantClient(":memory:")

    # Look up the embedding dimensions.
    # Note: _get_model_description is a private FastEmbed helper and may change between versions.
    text_model = TextEmbedding(model_name=TEXT_MODEL_NAME)
    text_embeddings_size = text_model._get_model_description(TEXT_MODEL_NAME)["dim"]
    image_model = ImageEmbedding(model_name=IMAGE_MODEL_NAME)
    image_embeddings_size = image_model._get_model_description(IMAGE_MODEL_NAME)["dim"]

    # One collection with two named vectors: "image" and "text"
    if not client.collection_exists(collection_name):
        client.create_collection(
            collection_name=collection_name,
            vectors_config={
                "image": models.VectorParams(size=image_embeddings_size, distance=models.Distance.COSINE),
                "text": models.VectorParams(size=text_embeddings_size, distance=models.Distance.COSINE),
            }
        )

    client.upload_points(
        collection_name=collection_name,
        points=[
            models.PointStruct(
                # Convert image_id to UUID
                id=create_uuid_from_image_id(doc['image_id']),
                vector={
                    "text": doc['caption_embedding'],
                    "image": doc['image_embedding'],
                },
                payload={
                    "image_id": doc['image_id'],
                    "caption": doc['caption'],
                    "image_path": doc['image_path']
                }
            )
            for doc in image_docs
        ]
    )

    return client
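A note on the point IDs: Qdrant accepts unsigned integers or UUIDs as point IDs, which is presumably why the string image IDs are mapped through `uuid.uuid5`. That function is deterministic, as the small sketch below shows. It reuses the namespace from `create_uuid_from_image_id`; the `point_id_for` name and the sample image IDs are hypothetical.

```python
import uuid

# Same fixed namespace as in create_uuid_from_image_id above
NAMESPACE_UUID = uuid.UUID("12345678-1234-5678-1234-567812345678")


def point_id_for(image_id: str) -> str:
    """Deterministically derive a UUID string from an image ID."""
    return str(uuid.uuid5(NAMESPACE_UUID, image_id))


first = point_id_for("ROCO_00001")   # hypothetical image ID
second = point_id_for("ROCO_00001")
other = point_id_for("ROCO_00002")

print(first == second)  # True: same input, same ID
print(first == other)   # False: different input, different ID
```

A practical upside of deterministic IDs is that re-running ingestion against a persistent collection should overwrite existing points rather than create duplicates.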

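4. Query conversion and similarity search:

We convert the user's query (text or image) into a vector embedding and run a similarity search in the Qdrant database to find the most relevant text or images. This enables both "query by text" and "query by image", improving retrieval accuracy across modalities.

File: src/embeddings_utils.py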

# Search for similar text and get corresponding images as well
def search_similar_text(collection_name, client, query, limit=3):
    text_model = TextEmbedding(model_name=TEXT_MODEL_NAME)
    search_query = text_model.embed([query])
    # Note: newer qdrant-client releases deprecate search() in favor of query_points()
    search_results = client.search(
        collection_name=collection_name,
        query_vector=('text', list(search_query)[0]),  # search against the named "text" vector
        with_payload=['image_path', 'caption'],
        limit=limit,
    )
    return search_results


# Search for similar images and get corresponding text as well
def search_similar_image(collection_name, client, query_image_path, limit=3):
    # Convert the query image into an embedding using the same model used for image embeddings
    image_embedding_model = ImageEmbedding(model_name=IMAGE_MODEL_NAME)

    # Embed the provided query image (assumed to be a file path)
    query_image_embedding = list(image_embedding_model.embed([query_image_path]))[0]

    # Perform the similarity search in the Qdrant collection for image embeddings
    search_results = client.search(
        collection_name=collection_name,
        query_vector=('image', query_image_embedding),  # search against the named "image" vector
        with_payload=['image_path', 'caption'],  # Fetch image paths and captions as metadata
        limit=limit,
    )
    return search_results


def merge_results(text_results, image_results):
    # Combine based on some metadata, or simply concatenate
    combined_results = text_results + image_results
    return combined_results
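Plain concatenation can return the same point twice when it matches both the text query and the image query. As one possible refinement, and not what the repository does, the sketch below keeps only the best-scoring hit per point ID. The `Hit` dataclass is a hypothetical stand-in for a search result with `id`, `score`, and `payload` attributes, and the scores are invented.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Hit:
    """Hypothetical stand-in for a search result with an id, score, and payload."""
    id: str
    score: float
    payload: Dict[str, str] = field(default_factory=dict)


def merge_unique(text_results: List[Hit], image_results: List[Hit]) -> List[Hit]:
    """Merge two result lists, keeping the best-scoring hit per point ID."""
    best: Dict[str, Hit] = {}
    for hit in text_results + image_results:
        current = best.get(hit.id)
        if current is None or hit.score > current.score:
            best[hit.id] = hit
    # Highest score first
    return sorted(best.values(), key=lambda h: h.score, reverse=True)


text_hits = [Hit("a", 0.91), Hit("b", 0.80)]
image_hits = [Hit("b", 0.95), Hit("c", 0.70)]
print([h.id for h in merge_unique(text_hits, image_hits)])  # ['b', 'a', 'c']
```

One caveat: text-to-text and image-to-image scores are not necessarily on a comparable scale, so sorting them together is a simplification. Deduplication alone already saves tokens by not sending the same reference image to the model twice.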

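5. Response generation with GPT-4o:

We use the retrieved data to prompt the GPT-4o model and generate a detailed response grounded in the relevant context.

Make sure to give the model a detailed, intuitive role. This helps you get more suitable results.

File: src/gpt_utils.py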

import base64
import json

import requests

from config import Config


class GPTClient:
    def __init__(self):
        self.api_key = Config.OPENAI_API_KEY
        self.api_url = "https://api.openai.com/v1/chat/completions"

    def query(self, prompt, retrieved_contexts, user_image=None):
        headers = {
            "Content-Type": "application/json",
            "Authorization": f"Bearer {self.api_key}"
        }

        chatbot_role = """
        You are a radiologist with an experience of 30 years.
        You analyse medical scans and text, and help diagnose underlying issues.
        """

        # Initialize message structure with the system prompt
        messages = [
            {"role": "system", "content": chatbot_role},
            {"role": "user", "content": [
                {"type": "text", "text": prompt}
            ]}
        ]

        # Add the user-uploaded image (if any)
        if user_image:
            with open(user_image, "rb") as image_file:
                base64_image = base64.b64encode(image_file.read()).decode('utf-8')
            messages[1]["content"].append({
                "type": "image_url",
                "image_url": {
                    "url": f"data:image/jpeg;base64,{base64_image}"
                }
            })

        # Explain the role of the retrieved reference cases
        # (the trailing spaces keep the concatenated sentences apart)
        messages[1]["content"].append({
            "type": "text",
            "text": "Additional context that you may use as a reference. Use them if you feel they are relevant to "
                    "the case. "
                    "NOTE: They are not the patient's images. They are of other patients which can be used as a "
                    "reference, if required."
        })

        # Add the retrieved contexts, which should include both captions and corresponding images
        for context in retrieved_contexts:
            caption = context.payload['caption']
            image_path = context.payload['image_path']

            # Add the caption to the message
            messages[1]["content"].append({
                "type": "text",
                "text": f"Caption: {caption}"
            })

            # Add the corresponding image to the message
            with open(image_path, "rb") as image_file:
                base64_image = base64.b64encode(image_file.read()).decode('utf-8')
            messages[1]["content"].append({
                "type": "image_url",
                "image_url": {
                    "url": f"data:image/jpeg;base64,{base64_image}"
                }
            })

        # Prepare the payload for the GPT API
        data = {
            "model": "gpt-4o",
            "messages": messages,
            "max_tokens": 600
        }

        # Send the request to the API
        response = requests.post(self.api_url, headers=headers, data=json.dumps(data))
        return response.json()

    def process_response(self, response):
        if 'choices' in response and len(response['choices']) > 0:
            return response['choices'][0]['message']['content']
        # No choices in the response (for example, an error payload)
        return None
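The client above labels every image as `image/jpeg` in the data URL, regardless of the file's actual type. As a small, optional refinement, the sketch below guesses the MIME type from the file extension using the standard library and falls back to JPEG. The `to_data_url` helper is hypothetical, and the demo file contains placeholder bytes rather than a real image.

```python
import base64
import mimetypes
import os
import tempfile


def to_data_url(image_path: str, default_mime: str = "image/jpeg") -> str:
    """Encode a local image file as a base64 data URL with a guessed MIME type."""
    mime, _ = mimetypes.guess_type(image_path)
    if mime is None or not mime.startswith("image/"):
        mime = default_mime  # fall back to the type the client above assumes
    with open(image_path, "rb") as image_file:
        encoded = base64.b64encode(image_file.read()).decode("utf-8")
    return f"data:{mime};base64,{encoded}"


# Demo with a throwaway file; the bytes are not a real image
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "scan.png")
    with open(demo_path, "wb") as f:
        f.write(b"demo")
    demo_url = to_data_url(demo_path)

print(demo_url)
```

A helper like this could replace both of the inline `base64.b64encode(...)` blocks in `query`, which would also remove the duplicated encoding code.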

6. Tying it all together:

Here we set up the MultimodalRAGSystem class. Its process_query method orchestrates retrieval and passes the query to the GPT model.

File: src/multimodal_rag_system.py

from src.create_data_embeddings import create_embeddings
from src.embeddings_utils import search_similar_text, search_similar_image, merge_results
from src.gpt_utils import GPTClient

COLLECTION_NAME = "medical_images_text"


class MultimodalRAGSystem:
    collection_name: str

    def __init__(self):
        self.gpt_client = GPTClient()
        self.qdrant_client = create_embeddings(COLLECTION_NAME)
        self.collection_name = COLLECTION_NAME

    def process_query(self, query, query_image_path=None, top_k=3):
        # 1. Text-based search if query is textual
        search_results_text = search_similar_text(self.collection_name, self.qdrant_client, query, limit=top_k)

        # 2. Image-based search if a query image is provided
        search_results_image = []
        if query_image_path:  # Only perform image retrieval if an image path is provided
            search_results_image = search_similar_image(self.collection_name, self.qdrant_client, query_image_path,
                                                        limit=top_k)

        # 3. Combine both results - merging text and image results
        combined_results = merge_results(search_results_text, search_results_image)

        # 4. Query GPT with the context and images
        gpt_response = self.gpt_client.query(query, combined_results, query_image_path)

        # 5. Process and return the response
        return self.gpt_client.process_response(gpt_response)

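7. Presenting the results with Gradio:

We use Gradio to create an interactive user interface where users can enter a text query and upload a medical image. The system returns a detailed response based on the multimodal data.

File: src/main.py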

import gradio as gr
from PIL import Image

from multimodal_rag_system import MultimodalRAGSystem

# Initialize the MultimodalRAGSystem
system = MultimodalRAGSystem()


# Define the Gradio function that will process the user input and image
def chatbot_interface(user_query, user_image=None):
    if user_image is not None:
        # Convert numpy array to a PIL Image and save it temporarily
        user_image = Image.fromarray(user_image)
        user_image_path = ("/Users/sarthak/Documents/Work/Personal_Projects/healthcare_multimodal_ai/data"
                           "/user_input_image.jpg")
        user_image.save(user_image_path)
    else:
        user_image_path = None

    # Get the response from the Multimodal AI system
    response = system.process_query(user_query, query_image_path=user_image_path)
    return response


# Create the Gradio interface with text input and image input
interface = gr.Interface(
    fn=chatbot_interface,
    inputs=[gr.components.Textbox(lines=5, label="User Query"), gr.components.Image(label="Upload Medical Image")],
    outputs=gr.components.Textbox(),
    title="Multimodal Medical Assistant",
    description="Ask medical-related questions and upload relevant medical images for analysis."
)

# Launch the Gradio interface
if __name__ == "__main__":
    interface.launch()
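The interface code saves every upload to one fixed absolute path on the author's machine, so the path has to be edited before running elsewhere, and simultaneous users would overwrite each other's file. A possible alternative, sketched here under the assumption that the upload is available as raw bytes (the original uses a PIL image instead), is to write each upload to its own temporary file. The `save_upload_to_temp` helper is hypothetical.

```python
import os
import tempfile


def save_upload_to_temp(image_bytes: bytes, suffix: str = ".jpg") -> str:
    """Write uploaded image bytes to a unique temporary file and return its path."""
    fd, path = tempfile.mkstemp(suffix=suffix)
    with os.fdopen(fd, "wb") as tmp_file:
        tmp_file.write(image_bytes)
    return path


path = save_upload_to_temp(b"not a real image")  # placeholder bytes
print(os.path.exists(path))
os.remove(path)  # the caller is responsible for cleanup
```

With a PIL image, the same idea applies: obtain a unique path from `tempfile.mkstemp` and pass it to the image's `save` method instead of the hard-coded location.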

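Output

Voila! The attached screenshot shows our first attempt at uploading a scan and getting an analysis of it. In the background, the system augments its knowledge by retrieving relevant case studies.

If no relevant images are found, it makes a preliminary diagnosis and suggests potential next steps.

Next Steps

We can improve this by supplying as many historical cases as possible. For example, a diagnostic or imaging center that has handled thousands of cases will have a large body of data that can be used to augment our queries and support well-informed decisions.

The prompt can also be tuned extensively, depending on the application's use case.

Used correctly, this could be a great tool for radiologists, with the potential to improve case turnaround time by 2x.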

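References and Resources

GitHub repository: https://github.com/iasarthak/healthcare_multimodal_ai

GPT-4 documentation: https://www.openai.com/research

Qdrant documentation: https://qdrant.tech/documentation/

FastEmbed library: https://github.com/fastembed

CLIP: https://arxiv.org/abs/2103.00020

A big shout-out to Abhishek Maurya, who was a tremendous help here. He interned at Jupiter, and mentoring him was one of my best experiences.

If you made it this far, follow me on Medium and connect with me on LinkedIn to chat more about data science!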