Chat GPT: What's under the hood? 🔑

(An almost failed attempt at) creating a code-based BN-GPT (Bhawna's Nano GPT) + training a decoder-only transformer

What's the point of doing all this?

I would rather read a bunch of research papers, learn a brand-new programming language, and work flat out for 3 or 4 days on a tight schedule than pay 20 bucks a month for Chat GPT. (Maybe that sounds a bit crazy, but who cares 🥱)

What is Chat GPT? 🌐

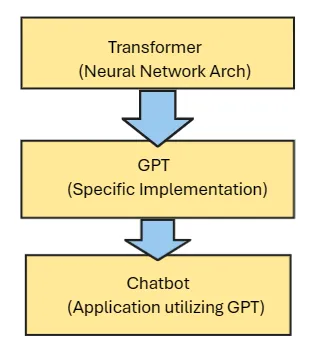

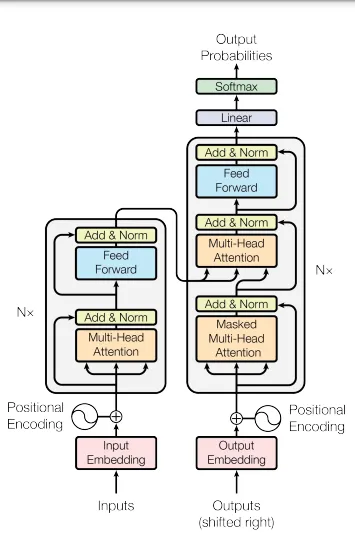

Chat GPT is a large language model (LLM) built on a complex neural network architecture called the transformer. The transformer was introduced in 2017 in the research paper "Attention Is All You Need", written by researchers at Google and the University of Toronto. It essentially provides the structure for how this model, and many models after it, work.

(How transformers and neural networks work, and how they are trained, is covered in detail later in this article.)

What does GPT mean? 🤔

🧠 GPT stands for Generative Pre-trained Transformer.

GPTs, first introduced by OpenAI with GPT-1 on June 11, 2018, are transformer models that are:

- pre-trained (pretty obviously)
- generative: they generate information (thanks to the first point)

A neural network takes in data, learns to recognize patterns in that data through training, and then predicts outputs for similar data.

🧠 Just think of them as the neural networks in your brain. Your brain has billions of neurons, linked by trillions of connections, that together form its neural network. They are connected not at random but in a strategic pattern that lets you train yourself to, for example, recognize that all of these images are 3s even though they look different:

Have you ever been amazed that your brain reads all of the images above as "3"? 😱

Well, thanks to neural networks!

Now, how does this apply to machine learning, specifically natural language processing (NLP), or GPT?

Well, GPT runs entirely on a complex neural network architecture.

Sure! Here’s the translation while keeping the HTML structure: ```html

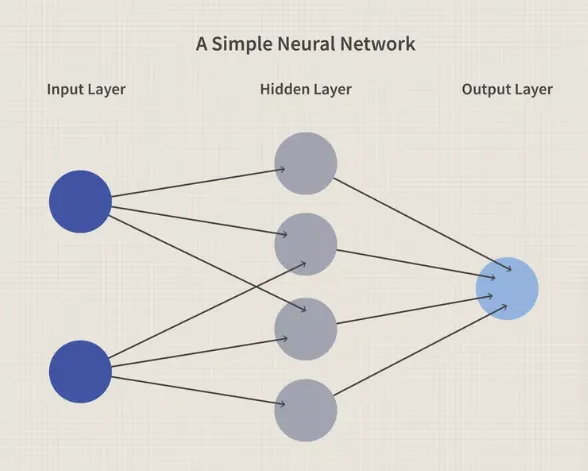

在你点击离开之前,请看一下这张图片,以避免在你的“神经网络”中搞乱事情:

```

Neural networks for noobs 👶

Certainly! Here's the translated text in simplified Chinese, keeping the HTML structure: ```html

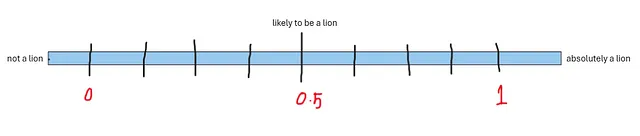

让我们考虑一个由3名学生组成的小组:Bhawna、Rohan和Ujala。他们从未见过狮子的样子,在学校里他们正在接受训练,以辨认狮子的外貌特征(他们的目标)。在训练过程中,他们被告知狮子的特征后,为了得到正确的结果,他们将与狮子完全不同的照片标记为0,与狮子完全匹配的照片标记为1。而介于0和1之间的0.5表示部分像狮子。为了更好地理解,请考虑以下情景:

``` This HTML snippet maintains the structure while providing the translated text in simplified Chinese.

They divide the work as follows: Bhawna is good at spotting a lion's eyes, Rohan is good at spotting a lion's nose, and Ujala is good at spotting a lion's mouth. Now they are given a picture of a cat, and their task is to score the feature each of them specializes in on a scale from 0 to 1. They collect this data and hand it to their group supervisor, Deepa, who is good at using it to decide whether the facial features belong to a lion. But here's the catch: Deepa is a math geek, and she uses the following formula:

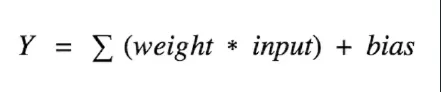

Facial structure

Face = eyes × w1 + nose × w2 + mouth × w3

Here w1, w2, and w3 are the weightages of the three features. In this analogy, a weightage is the likeliness, or simply a number from 0 to 1 (in real neural networks, weights can be any real number, including negative ones).

Now, I can express it more generally:

z = x1·w1 + x2·w2 + … + xn·wn + bias

In machine learning, this formula is very useful for understanding neural networks. Think of z as a neuron (the basic unit of a neural network, also called a node), x1, x2, …, xn as the input values (which are also nodes in the network), and w1, w2, …, wn as the weights. If a neuron has 4 inputs, it has 4 weights, and these can be adjusted during training.
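To make the neuron formula z = x1·w1 + x2·w2 + … + xn·wn + bias concrete, here is a minimal pure-Python sketch of a single neuron with 4 inputs (and therefore 4 weights) plus a bias. The function name `neuron_z` and all of the numbers are hypothetical, chosen only for illustration.

```python
def neuron_z(inputs, weights, bias):
    """Weighted sum of a single neuron: z = sum(x_i * w_i) + bias."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Hypothetical example: 4 inputs, so 4 weights
inputs = [1.0, 0.5, 0.0, 2.0]
weights = [0.2, 0.4, 0.9, 0.1]
bias = 0.5
z = neuron_z(inputs, weights, bias)
print(round(z, 2))  # prints 1.1
```

During training, only the weights and the bias change; the inputs always come from the data.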

Gotcha, gotcha!!!

What the heck is BIAS? 😱

It is an extra input to the neuron whose value is always 1 (the weight attached to it, usually just called the bias, is learned like any other weight). This ensures that the neuron still produces some output (basically, its value z) even when all of its other inputs are 0.
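One common way to see the bias as "an extra input that is always 1" is to append a constant 1 to the inputs and treat the bias as that input's weight. This minimal sketch, with hypothetical function names and numbers, checks that both views give the same z, and that with all inputs at 0 the neuron still outputs the bias.

```python
def z_with_bias(inputs, weights, bias):
    # Bias added as a separate term
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def z_with_constant_input(inputs, weights, bias):
    # Bias treated as the weight of an extra input that is always 1
    extended_inputs = list(inputs) + [1.0]
    extended_weights = list(weights) + [bias]
    return sum(x * w for x, w in zip(extended_inputs, extended_weights))


weights = [0.3, -0.7, 0.5]
bias = 0.25

# All inputs are 0, yet the output is not 0: it equals the bias
zero_inputs = [0.0, 0.0, 0.0]
print(z_with_bias(zero_inputs, weights, bias))            # prints 0.25
print(z_with_constant_input(zero_inputs, weights, bias))  # prints 0.25
```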

Coming back to the lion identification training 🔎

Let's say these are the results:

- Rohan (nose): 0.5
- Ujala (mouth): 0.3
- Bhawna (eyes): 0.1

Conclusively, Deepa computes:

z = eyes*0.1 + nose*0.5 + mouth*0.3 = 0.79

Hence, it means: the face is a lion's 🦁

Similarly, they collect data about the lion's body from three other students, and their mentor, Priyanka, comes up with a result of 0.4. They hand all of this data to the head trainer, Nadeem, who decides whether the creature is a lion.

Now, in machine learning terms, Rohan, Ujala, and Bhawna are the input layer, Deepa is a hidden layer, and Priyanka is the final output layer.
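The idea of data flowing from an input layer through a hidden layer to an output can be sketched as a tiny forward pass in pure Python. This is a minimal sketch under several assumptions: the three inputs are the eyes, nose, and mouth scores from the example, the hidden layer has two neurons (loosely, the supervisors), every weight and bias is a made-up number, and a sigmoid is used to squash each neuron's z into the 0 to 1 range, which the article itself does not specify.

```python
import math


def sigmoid(z):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    # One fully connected layer: each row of `weights` belongs to one neuron
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(features):
    # Hypothetical weights and biases, for illustration only
    hidden_weights = [[0.8, 0.6, 0.4], [0.2, 0.9, 0.7]]
    hidden_biases = [-0.5, -0.3]
    output_weights = [[1.5, 1.2]]
    output_biases = [-1.0]
    hidden = layer(features, hidden_weights, hidden_biases)  # hidden layer
    return layer(hidden, output_weights, output_biases)[0]   # output layer


# Input layer: eyes, nose, mouth scores from the example above
score = forward([0.1, 0.5, 0.3])
print(f"lion-likeness: {score:.2f}")
```

Each layer simply applies the neuron formula once per neuron; stacking layers is what turns single neurons into a network.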

As simple as that! This is how a neural network works. Now, how is it trained?

In this real-life example, we saw the students being trained by being told, verbally, what a lion's features are. But neural networks in machine learning don't work this way: they can't be taught through language or other means the way humans can. Instead, the process relies on guessing. Imagine the candidates know nothing and are simply told to score the features at random. Once all of the data has passed through the hidden layer and finally reaches the output layer of the network, the result is: the output is wrong!

The output is judged incorrect because, in our example, Nadeem (in machine learning terms, the human training the neural network) knows whether the face is a lion's or not. So the output can be checked as correct or incorrect. (In practice, this knowledge comes from the labels in the training data.)

This is handled by the output layer. It reverses the process and tells the input layer that the data it sent was wrong (this is called backpropagation; what flows backward is the error, not the data). The input layer, i.e. the candidates Bhawna, Ujala, and Rohan, take note of this error. They now understand that the features they identified as a lion's were not actually a lion's, so the next time those features are shown, they must not identify them as a lion. This backpropagation is repeated many times, and the weights are adjusted accordingly (or, in our analogy, the candidates adjust their thinking). After seeing thousands of lion pictures, the candidates become much better at detecting lions. And that is how a neural network is trained!
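The guess, check, and adjust loop described above can be sketched with a single sigmoid neuron trained by gradient descent in pure Python. This is a minimal sketch, not how GPT is trained at scale: the toy examples (eyes, nose, and mouth scores labelled 1 for lion and 0 for not a lion), the learning rate, and the number of passes are all made up, and with only one neuron there is just one layer for the error to flow back through. The update uses the standard cross-entropy gradient for a sigmoid neuron, (prediction - label) × input.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(examples, epochs=2000, lr=0.5, seed=0):
    rng = random.Random(seed)
    # Start from random guesses: the neuron "knows nothing" yet
    weights = [rng.uniform(-1, 1) for _ in range(3)]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label  # how wrong was the guess?
            # Send the error back: nudge each weight against its gradient
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


# Hypothetical labelled data: (eyes, nose, mouth) scores -> 1 = lion, 0 = not a lion
examples = [
    ([0.9, 0.8, 0.9], 1),
    ([0.8, 0.9, 0.7], 1),
    ([0.1, 0.5, 0.3], 0),
    ([0.2, 0.1, 0.2], 0),
]
weights, bias = train(examples)
for features, label in examples:
    print(features, label, round(predict(weights, bias, features), 3))
```

After training, lion-like feature scores give predictions close to 1 and the others close to 0: the "candidates" have adjusted their thinking.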

Alright, now we need to get familiar with transformers:

Chat GPT knows nothing 💁‍♀️

ChatGPT knows nothing. It just "predicts".
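To see what "it just predicts" means at the smallest possible scale, here is a minimal pure-Python sketch of a character-level bigram predictor: it counts which character follows which in some training text and predicts the most frequent follower. This is a deliberately simple stand-in, not how ChatGPT works internally (ChatGPT uses a transformer and looks at far more context than one character); the training string and function names are made up.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    # For each character, count which characters come right after it
    followers = defaultdict(Counter)
    for current, nxt in zip(text, text[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, char):
    # Predict the follower seen most often in training (None if char is unseen)
    if char not in followers:
        return None
    return followers[char].most_common(1)[0][0]


followers = train_bigrams("hello hello help")
print(predict_next(followers, "h"))  # prints e
print(predict_next(followers, "e"))  # prints l
```

The model "knows" nothing about words; it only reproduces statistics of the text it saw. The PyTorch code later in this article does the same job far better: it learns the probabilities with a transformer and samples from them with `torch.multinomial` instead of always taking the most likely character.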

Let's understand how and why through transformers, and through the first (and coolest) research paper I ever read, "Attention Is All You Need":

Before transformers, RNNs were used for most sequence-to-sequence tasks. RNNs suffer from problems such as slow computation, vanishing or exploding gradients, and difficulty accessing information from much earlier in a sequence. Why these problems occur is beyond the scope of this article, but it is well worth exploring if you are interested. Access to information from far back is necessary because context matters.

Info📖: What is a seq2seq task?

Seq2seq is a family of machine learning approaches used for natural language processing. Applications include language translation, image captioning, conversational models, and text summarization. Seq2seq uses sequence transformation: it turns one sequence into another sequence.

Getting started with the project:

This repository is really handy for people like me who aren't machine learning professionals and just want to play around. 🚀

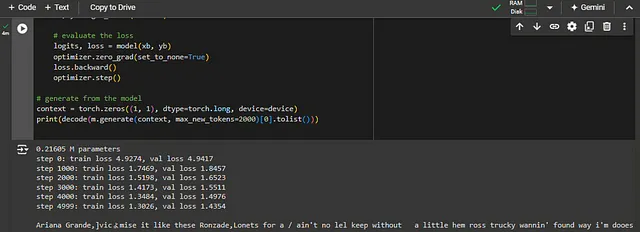

I'm not a machine learning expert, just someone who wants to dip their toes into the field and is fascinated by GPT (Generative Pre-trained Transformer). Since I couldn't get it running in my own computer's terminal, I ran some experiments in Karpathy's Google Colab notebook instead.

```python
import torch
import torch.nn as nn
from torch.nn import functional as F

# hyperparameters
batch_size = 16 # how many independent sequences will we process in parallel?
block_size = 32 # what is the maximum context length for predictions?
max_iters = 5000
eval_interval = 100
learning_rate = 1e-3
device = 'cuda' if torch.cuda.is_available() else 'cpu'
eval_iters = 200
n_embd = 64
n_head = 4
n_layer = 4
dropout = 0.0
# ------------

torch.manual_seed(1337)

# wget https://raw.githubusercontent.com/karpathy/char-rnn/master/data/tinyshakespeare/input.txt
with open('input.txt', 'r', encoding='utf-8') as f:
    text = f.read()

# here are all the unique characters that occur in this text
chars = sorted(list(set(text)))
vocab_size = len(chars)
# create a mapping from characters to integers
stoi = { ch:i for i,ch in enumerate(chars) }
itos = { i:ch for i,ch in enumerate(chars) }
encode = lambda s: [stoi[c] for c in s] # encoder: take a string, output a list of integers
decode = lambda l: ''.join([itos[i] for i in l]) # decoder: take a list of integers, output a string

# Train and test splits
data = torch.tensor(encode(text), dtype=torch.long)
n = int(0.9*len(data)) # first 90% will be train, rest val
train_data = data[:n]
val_data = data[n:]

# data loading
def get_batch(split):
    # generate a small batch of data of inputs x and targets y
    data = train_data if split == 'train' else val_data
    ix = torch.randint(len(data) - block_size, (batch_size,))
    x = torch.stack([data[i:i+block_size] for i in ix])
    y = torch.stack([data[i+1:i+block_size+1] for i in ix])
    x, y = x.to(device), y.to(device)
    return x, y

@torch.no_grad()
def estimate_loss():
    out = {}
    model.eval()
    for split in ['train', 'val']:
        losses = torch.zeros(eval_iters)
        for k in range(eval_iters):
            X, Y = get_batch(split)
            logits, loss = model(X, Y)
            losses[k] = loss.item()
        out[split] = losses.mean()
    model.train()
    return out

class Head(nn.Module):
    """ one head of self-attention """

    def __init__(self, head_size):
        super().__init__()
        self.key = nn.Linear(n_embd, head_size, bias=False)
        self.query = nn.Linear(n_embd, head_size, bias=False)
        self.value = nn.Linear(n_embd, head_size, bias=False)
        self.register_buffer('tril', torch.tril(torch.ones(block_size, block_size)))
        self.dropout = nn.Dropout(dropout)

    def forward(self, x):
        B,T,C = x.shape
        k = self.key(x)   # (B,T,hs)
        q = self.query(x) # (B,T,hs)
        # compute attention scores ("affinities"), scaled by 1/sqrt(head_size)
        # as in "Attention Is All You Need"
        wei = q @ k.transpose(-2,-1) * k.shape[-1]**-0.5 # (B, T, hs) @ (B, hs, T) -> (B, T, T)
        wei = wei.masked_fill(self.tril[:T, :T] == 0, float('-inf')) # (B, T, T)
        wei = F.softmax(wei, dim=-1) # (B, T, T)
        wei = self.dropout(wei)
        # perform the weighted aggregation of the values
        v = self.value(x) # (B,T,hs)
        out = wei @ v # (B, T, T) @ (B, T, hs) -> (B, T, hs)
        return out

class MultiHeadAttention(nn.Module):
    """ multiple heads of self-attention in parallel """

    def __init__(self, num_heads, head_size):
        super().__init__()
        self.heads = nn.ModuleList([Head(head_size) for _ in range(num_heads)])
        self.proj = nn.Linear(n_embd, n_embd)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x):
        out = torch.cat([h(x) for h in self.heads], dim=-1)
        out = self.dropout(self.proj(out))
        return out

class FeedFoward(nn.Module):
    """ a simple linear layer followed by a non-linearity """

    def __init__(self, n_embd):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(n_embd, 4 * n_embd),
            nn.ReLU(),
            nn.Linear(4 * n_embd, n_embd),
            nn.Dropout(dropout),
        )

    def forward(self, x):
        return self.net(x)

class Block(nn.Module):
    """ Transformer block: communication followed by computation """

    def __init__(self, n_embd, n_head):
        # n_embd: embedding dimension, n_head: the number of heads we'd like
        super().__init__()
        head_size = n_embd // n_head
        self.sa = MultiHeadAttention(n_head, head_size)
        self.ffwd = FeedFoward(n_embd)
        self.ln1 = nn.LayerNorm(n_embd)
        self.ln2 = nn.LayerNorm(n_embd)

    def forward(self, x):
        x = x + self.sa(self.ln1(x))
        x = x + self.ffwd(self.ln2(x))
        return x

# a small GPT-style, decoder-only transformer language model (despite the "Bigram" name)
class BigramLanguageModel(nn.Module):

    def __init__(self):
        super().__init__()
        # lookup tables for token embeddings and position embeddings
        self.token_embedding_table = nn.Embedding(vocab_size, n_embd)
        self.position_embedding_table = nn.Embedding(block_size, n_embd)
        self.blocks = nn.Sequential(*[Block(n_embd, n_head=n_head) for _ in range(n_layer)])
        self.ln_f = nn.LayerNorm(n_embd) # final layer norm
        self.lm_head = nn.Linear(n_embd, vocab_size)

    def forward(self, idx, targets=None):
        B, T = idx.shape

        # idx and targets are both (B,T) tensor of integers
        tok_emb = self.token_embedding_table(idx) # (B,T,C)
        pos_emb = self.position_embedding_table(torch.arange(T, device=device)) # (T,C)
        x = tok_emb + pos_emb # (B,T,C)
        x = self.blocks(x) # (B,T,C)
        x = self.ln_f(x) # (B,T,C)
        logits = self.lm_head(x) # (B,T,vocab_size)

        if targets is None:
            loss = None
        else:
            B, T, C = logits.shape
            logits = logits.view(B*T, C)
            targets = targets.view(B*T)
            loss = F.cross_entropy(logits, targets)

        return logits, loss

    def generate(self, idx, max_new_tokens):
        # idx is (B, T) array of indices in the current context
        for _ in range(max_new_tokens):
            # crop idx to the last block_size tokens
            idx_cond = idx[:, -block_size:]
            # get the predictions
            logits, loss = self(idx_cond)
            # focus only on the last time step
            logits = logits[:, -1, :] # becomes (B, C)
            # apply softmax to get probabilities
            probs = F.softmax(logits, dim=-1) # (B, C)
            # sample from the distribution
            idx_next = torch.multinomial(probs, num_samples=1) # (B, 1)
            # append sampled index to the running sequence
            idx = torch.cat((idx, idx_next), dim=1) # (B, T+1)
        return idx

model = BigramLanguageModel()
m = model.to(device)
# print the number of parameters in the model
print(sum(p.numel() for p in m.parameters())/1e6, 'M parameters')

# create a PyTorch optimizer
optimizer = torch.optim.AdamW(model.parameters(), lr=learning_rate)

for iter in range(max_iters):

    # every once in a while evaluate the loss on train and val sets
    if iter % eval_interval == 0 or iter == max_iters - 1:
        losses = estimate_loss()
        print(f"step {iter}: train loss {losses['train']:.4f}, val loss {losses['val']:.4f}")

    # sample a batch of data
    xb, yb = get_batch('train')

    # evaluate the loss
    logits, loss = model(xb, yb)
    optimizer.zero_grad(set_to_none=True)
    loss.backward()
    optimizer.step()

# generate from the model
context = torch.zeros((1, 1), dtype=torch.long, device=device)
print(decode(m.generate(context, max_new_tokens=2000)[0].tolist()))
```
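The heart of the `Head` class above is masked (causal) scaled dot-product attention from "Attention Is All You Need": softmax(QKᵀ / √d_k) V, where each position may only attend to itself and earlier positions. Here is a minimal pure-Python sketch of that computation for a single sequence with hypothetical tiny vectors. Unlike `Head`, it takes the queries, keys, and values directly instead of learning them with `nn.Linear` projections, and it has no dropout.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(queries, keys, values):
    """Masked scaled dot-product attention for one sequence (lists of vectors)."""
    d_k = len(keys[0])
    outputs = []
    for t, q in enumerate(queries):
        # Position t only looks at positions 0..t (the causal mask, like `tril`)
        scores = [
            sum(qi * ki for qi, ki in zip(q, keys[s])) / math.sqrt(d_k)
            for s in range(t + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors
        out = [
            sum(w * values[s][j] for s, w in enumerate(weights))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Hypothetical 3-token sequence with 2-dimensional vectors
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
for row in causal_attention(q, k, v):
    print([round(x, 3) for x in row])
```

The first token can only see itself, so its output is exactly its own value vector; later tokens blend the values of everything before them. This is why a decoder-only model can be trained to predict the next token without "peeking" at it.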

Try something of your own:

Go to Kaggle and download a dataset of your liking. Then, in the code above, find line 22:

```python
with open('input.txt', 'r', encoding='utf-8') as f:
    text = f.read()
```

Replace input.txt with your dataset's file name or path. For example, I used a dataset of Ariana Grande lyrics, and my Baby GPT started babbling like Ariana.

```python
with open('/content/sample_data/ArianaGrande.csv', 'r', encoding='utf-8') as f:
    text = f.read()
```
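Pointing the script straight at a CSV file works because the model simply reads the whole file as characters, but it will also learn the header row, the commas, and any other columns. If you only want the lyrics, one option is a minimal sketch using Python's built-in `csv` module that pulls out a single column and joins it into one training text. The function name `load_column_text` and the column name `lyric` are hypothetical: check the header of your own Kaggle file.

```python
import csv


def load_column_text(path, column):
    """Read one column of a CSV file and join its rows into one training string."""
    with open(path, 'r', encoding='utf-8', newline='') as f:
        reader = csv.DictReader(f)
        # Skip rows where the column is missing or empty
        rows = [row[column] for row in reader if row.get(column)]
    return '\n'.join(rows)


# Hypothetical usage: replace the path and column name with your own
# text = load_column_text('/content/sample_data/ArianaGrande.csv', 'lyric')
```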

So you can see this tiny GPT babbling Ariana. With more training, it starts spitting out somewhat understandable words, but not fully comprehensible lyrics!

You can find a more interactive explanation in my video:

That's it from my end. See you in the next one 🤩