Sure, here is the translation of "A Chatbot for Your Tax Return — Part 1: Intro & Web Crawler" into simplified Chinese, while preserving HTML structure: ```html 税务申报聊天机器人 — 第1部分:简介与网络爬虫 ```

在保持HTML结构的前提下,将以下英文文本翻译为简体中文: 第一部分:为ATO创建一个网页抓取器。

Sure, here's the translation in simplified Chinese, keeping the HTML structure intact: ```html

第二部分:使用GPT和LLama3构建本地聊天机器人。

```Sure, here's the text translated into simplified Chinese while keeping the HTML structure: ```html

第三部分:使用Groq和Streamlit Community将事物部署到云端。

```Certainly! Here is the translated text in simplified Chinese, keeping the HTML structure intact: ```html

报税可能是一项令人望而却步的任务。作为在澳大利亚居住的人,我惊讶地发现没有易于使用的工具可以帮助解决以下问题:

``` This HTML code translates to: "报税可能是一项令人望而却步的任务。作为在澳大利亚居住的人,我惊讶地发现没有易于使用的工具可以帮助解决以下问题:"- Sure, here's the simplified Chinese translation of "Answering questions about tax rules, laws and regulations" while keeping the HTML structure intact: ```html 回答关于税收规则、法律和法规的问题 ```

- 在 HTML 结构中保持不变,将以下英文文本翻译为简体中文: 申报税务

Sure, here's the translation of the text into simplified Chinese, while keeping the HTML structure: ```html

澳大利亚人可以在财政年度结束后申报他们的税务申报表,该财政年度从7月1日持续到次年的6月30日。通常,正式的税务申报期从7月1日开始,申报的最后期限为10月31日,逾期将会面临罚款。最简单的方法是通过ATO网站进行(假设ATO已经链接到myGov)。

``` This HTML snippet contains the translated text in simplified Chinese, ready for use in a web context.Sure, here is the translated text in simplified Chinese while maintaining HTML structure: ```html 解决(2)要稍微复杂一些,我们在这个系列中不打算涉及。现在,让我们先专注于(1)。 ``` This HTML structure ensures that the translated Chinese text maintains its proper formatting and appearance when displayed on a webpage.

Sure, here's the translation in simplified Chinese, while keeping the HTML structure intact: ```html

我们在这些系列中使用ATO和ATO的网站,但在这里讨论的相同技术可以用于任何其他国家的税务机构。

``` This HTML snippet translates the English text into simplified Chinese and maintains the structure suitable for displaying on a webpage.

Sure, here's the translation of "What can I use already?" in simplified Chinese, while maintaining the HTML structure: ```html 我现在能用什么? ``` This HTML snippet ensures the text "我现在能用什么?" (What can I use already?) is properly displayed and recognized as simplified Chinese.

Here’s the translation with HTML structure preserved: ```html There are a few options: 有几个选项: ```

- Sure, here is the translated text in simplified Chinese while maintaining the HTML structure: ```html 使用LLM。ChatGPT或Perplexity在回复之前搜索网络,做得非常好。 ``` This translates to: ```plaintext 使用LLM。ChatGPT或Perplexity在回复之前搜索网络,做得非常好。 ```

- Sure, here is the text translated into simplified Chinese while keeping the HTML structure intact: ```html 询问会计师 ```

- 在保持HTML结构的前提下,将以下英文文本翻译成简体中文: 有一些新成立的公司,比如TaxGPT(澳洲版),未来可能会提供问答服务,但目前它们似乎更专注于为会计师提供帮助,而不是面向普通公众。

- Sure, here's the translation in simplified Chinese while keeping the HTML structure intact:

```html

直接给ATO发送电子邮件或留言。他们可以提供帮助。不过,可能需要几天时间才能收到他们的回复。

``` This HTML snippet translates the English text "Email or message ATO directly. They can help. However, It might take a few days for them to get back to you." into simplified Chinese. - To translate the given English text into simplified Chinese while keeping the HTML structure intact, you can use the following: ```html [更新] 我刚刚注意到ATO现在有一个聊天机器人功能。不过,它似乎不是最智能的,更像是一种老式的聊天机器人。 ``` In this translation: - `[更新]` is enclosed in `` tags to maintain structure and style. - Chinese characters are used for the translation of the text. Ensure that your HTML structure allows for proper display of Chinese characters if you are embedding this into a webpage.

To translate "So, what can I really use?" into simplified Chinese while keeping the HTML structure, you would write: ```html 那么,我真正可以使用什么呢? ``` This HTML code maintains the language attribute for simplified Chinese (`lang="zh-CN"`) and encapsulates the translated text within a `` tag.

在HTML结构中保持不变,将以下英文文本翻译为简体中文: 使用LLM。它们足够好。

Sure, here's the translated text in simplified Chinese while keeping the HTML structure: ```html

那么,我们需要建立一个新的聊天机器人吗?我认为是的。然而,要建立一个比如 GPT-4 更准确和高效的优秀聊天机器人是一项艰巨的任务。我将向您展示如何开始。然而,在聊天机器人能够自信地使用之前,它需要大量的优化。

``` This HTML snippet maintains the structure and faithfully translates the provided English text into simplified Chinese.Certainly! Here is the translation of "Steps" into simplified Chinese, while keeping the HTML structure: ```html 步骤 ``` This HTML code will display "步骤" in simplified Chinese characters on a web page.

Sure, here's the translated text in simplified Chinese while keeping the HTML structure intact: ```html

这些是我们将要遵循的步骤:

```- Sure, here is the simplified Chinese translation of the text, keeping the HTML structure intact:

```html

创建一个爬虫,用来从ATO网站上抓取数据。我们将使用Scrapy和Selenium。这些数据将稍后被输入到一个LLM中。

```

In this HTML snippet, the translated Chinese text is encapsulated within `` tags, maintaining the structure as requested.

- Sure, here's the translation of the text into simplified Chinese, while maintaining the HTML structure: ```html

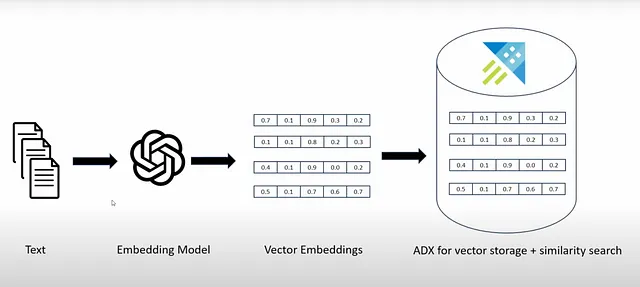

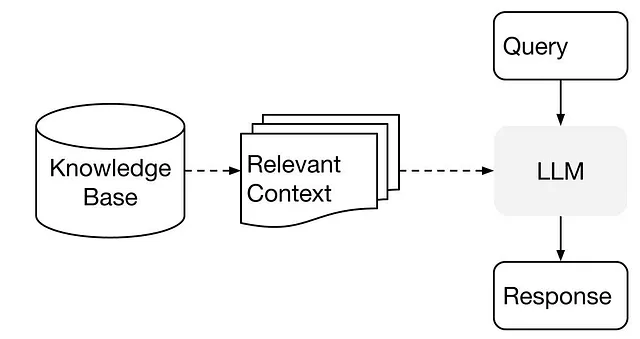

通过Llama-index创建一个RAG。我将向您展示如何在本地运行或者将您的索引存储在像Pinecone这样的向量数据库上。我们将尝试使用GPT和LLama3来训练我们的LLM模型。

``` In simplified Chinese characters: ```html通过Llama-index创建一个RAG。我将向您展示如何在本地运行或者将您的索引存储在像Pinecone这样的向量数据库上。我们将尝试使用GPT和LLama3来训练我们的LLM模型。

``` This translation preserves the HTML tags while converting the English text into simplified Chinese.- 在使用Streamlit构建聊天界面。

在保持HTML结构的情况下,将以下英文文本翻译为简体中文: 这些系列包含三个部分:

在这里,您正在查看它。我们将在这里涵盖抓取部分。

Sure, here's the translated text in simplified Chinese while keeping the HTML structure: ```html

第二部分:使用GPT和LLama3构建本地聊天机器人。

```Sure, here is the translation of the text "Part 3: Deploying things onto the cloud using Groq and Streamlit Community" into simplified Chinese while keeping the HTML structure: ```html

第三部分:使用Groq和Streamlit Community将事物部署到云端。

```在这里你可以找到我们讨论过的所有Python代码在Github上。

Sure, here's the translation of "Step 1: Creating the Scraper" into simplified Chinese, while keeping the HTML structure: ```html Step 1: 创建网页抓取器 ``` In this HTML snippet, `创建网页抓取器` translates to "Creating the Scraper" in simplified Chinese.

To translate "We’re going to use Python. Let’s install Scrapy." into simplified Chinese while keeping the HTML structure, you can represent it like this: ```html 我们将要使用 Python。让我们安装 Scrapy。 ``` In this HTML snippet: - "我们将要使用 Python。" translates to "We're going to use Python." - "让我们安装 Scrapy。" translates to "Let's install Scrapy." Make sure your HTML document uses UTF-8 encoding to display Chinese characters correctly.

pip install scrapyHere is the text translated into simplified Chinese, keeping the HTML structure intact: ```html 现在,创建一个新的scrapy项目。 ``` This HTML structure ensures that the translated text can be easily integrated into a web page or document.

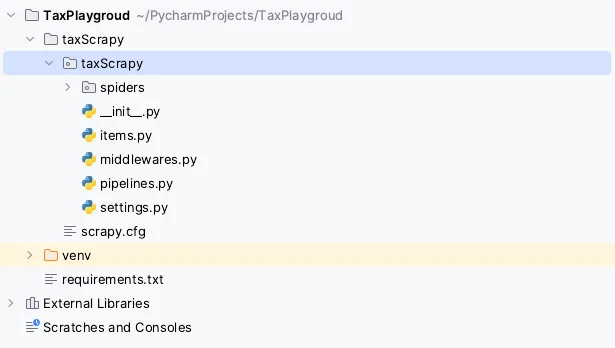

scrapy startproject taxScrapy这将创建一个新目录。

scrapy project Sure, here's the text translated into simplified Chinese while keeping the HTML structure: ```html

该目录包含运行网页抓取管道所需的所有样板代码。稍后我们将自定义这些文件。

``` In this HTML snippet, `` tags are used to enclose the translated text, which means "This directory contains all the boilerplate code for running a scraping pipeline. We will customize these files later."

To keep the HTML structure intact while translating the text into simplified Chinese, you can use the following: ```html

现在,创建一个新的爬虫。爬虫包含了运行爬取的代理代码。

``` In this HTML snippet: - `` tags are used to enclose the translated text, indicating a paragraph. - The Chinese text is the translation of "Now, create a new spider. A spider contains the code for the agent running the crawl." Make sure to embed this HTML snippet within your overall HTML structure as needed.

cd taxScrapy

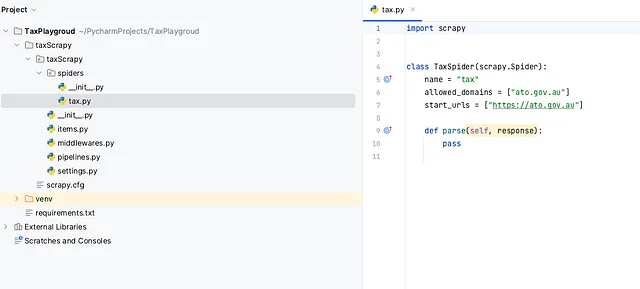

scrapy genspider tax https://ato.gov.au请随意使用任何与您所居住国家更相关的网站。

tax.py 在保持HTML结构不变的情况下,将以下英文文本翻译为简体中文: 让我们修改`parse`函数。

def parse(self, response):

page_text = response.xpath("//body//text()").getall()

page_text = " ".join(page_text).strip()

yield {

"url": response.url,

"text": page_text

}在保留HTML结构的情况下,将以下英文文本翻译为简体中文: 运行爬虫程序:

scrapy crawl tax -o output.json在保持HTML结构的前提下,将以下英文文本翻译成简体中文: 这将创建一个 output.json 文件,其内容遵循我们在解析函数中定义的结构。

Sure, here's the translation of the text into simplified Chinese while keeping the HTML structure: ```html

好的,现在的问题是我们如何导航到从主页链接的其他页面?我们在这里有一些要求:

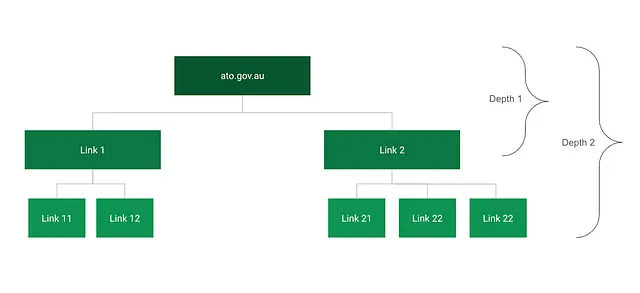

``` This HTML snippet retains the structure while presenting the text in simplified Chinese.- 递归抓取从主页开始

- 在保持HTML结构的情况下,将以下英文文本翻译为简体中文: 限制我们的爬行深度,以防止蜘蛛永无止境地继续下去。

what scraping depth means 在保持HTML结构的情况下,将以下英文文本翻译成简体中文: 3. 输出到多个文件而不是一个文件。我们不希望单个文件变得太大以至于无法打开。

好的,让我们相应地修改代码。

To translate "tax.py" to simplified Chinese while keeping the HTML structure, you would format it as follows: ```html tax.py ``` This HTML structure ensures that the text "tax.py" is identified as simplified Chinese, allowing browsers and search engines to interpret it correctly.

import scrapy # Importing Scrapy for web scraping

from w3lib.html import remove_tags # Importing a utility to remove HTML tags from a string

# Define a spider class for scraping tax-related information from the ATO website

class TaxSpider(scrapy.Spider):

name = "tax" # Name of the spider

allowed_domains = ["ato.gov.au"] # Domains allowed to be scraped

start_urls = ["https://www.ato.gov.au/"] # Starting URL for the spider

def __init__(self, max_depth=1, *args, **kwargs):

super(TaxSpider, self).__init__(*args, **kwargs)

self.max_depth = int(max_depth) # Maximum depth for crawling, converted to an integer

self.visited_urls = set() # Set to keep track of visited URLs

# Main parsing method

def parse(self, response):

current_depth = response.meta.get('depth', 0) # Get the current depth from the response meta data

# Extract and clean text from the page

page_text = response.xpath("//body//text()").getall()

page_text = ' '.join(page_text).strip()

page_text = remove_tags(page_text)

# Yield a dictionary containing the URL, the cleaned text, and the current depth

yield {

'url': response.url,

'text': page_text,

'depth': current_depth

}

self.visited_urls.add(response.url) # Add the current URL to the set of visited URLs

# If the current depth is less than the maximum depth, continue crawling

if current_depth < self.max_depth:

links = response.xpath("//a/@href").getall() # Extract all href attributes from anchor tags

for link in links:

# Normalize the link to a full URL

if link.startswith('http'):

next_page = link

else:

next_page = response.urljoin(link)

# Check if the next page URL is allowed by the domains

if any(domain in next_page for domain in self.allowed_domains):

# Yield a new request for the next page, increasing the depth by 1

yield scrapy.Request(next_page, callback=self.parse, meta={'depth': current_depth + 1})Sure, the translation of "pipelines.py" to simplified Chinese, while keeping the HTML structure, would be: ```html

pipelines.py

```# Define your item pipelines here

#

# Do not forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import os # Importing os module for creating directories and handling file paths

import json # Importing json module for writing items to JSON files

# Define a pipeline class for processing scraped items

class TaxscrapyPipeline:

def open_spider(self, spider):

# This method is called when the spider is opened

self.file_index = 1 # Initialize a file index to keep track of the output files

def process_item(self, item, spider):

# Define the directory where the output files will be saved

directory = "output_files"

if not os.path.exists(directory):

os.makedirs(directory) # Create the directory if it does not exist

# Define the filename for the current item using the file index

filename = os.path.join(directory, f'output_{self.file_index}.json')

# Open the file and write the item as a JSON string

with open(filename, 'w') as f:

f.write(json.dumps(dict(item)) + "\n")

# Increment the file index for the next item

self.file_index += 1

return item # Return the item for further processing if neededSure, here is the translated text in simplified Chinese: ```html 也将以下内容添加到settings.py的末尾。 ```

ITEM_PIPELINES = {

'taxScrapy.pipelines.TaxscrapyPipeline': 1,

}To keep the HTML structure intact while translating "To run:" into simplified Chinese, you can use the following: ```html 运行: ``` This HTML snippet ensures that the text "To run:" is displayed in simplified Chinese characters, while maintaining the structure of your HTML document.

scrapy crawl tax -a max_depth=1Here is the translated text in simplified Chinese, while keeping the HTML structure intact: ```html

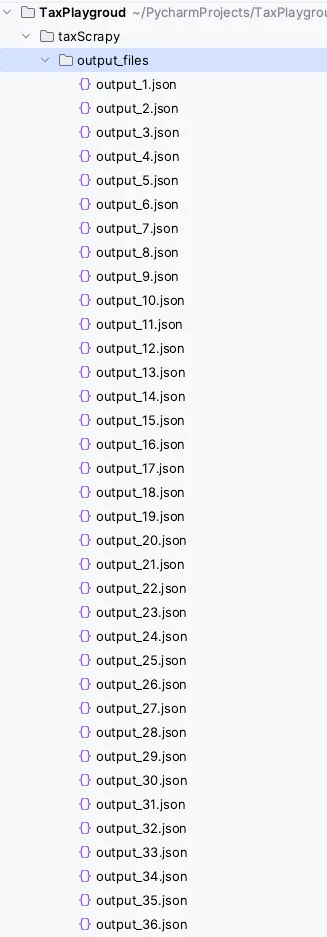

正如您所见,我们删除了输出参数,因为输出路径现在已经硬编码在pipelines.py中。请随意调整max_depth参数,并查看收集数据所需的时间。

```

scraping output Sure, here's the translation in simplified Chinese while maintaining HTML structure: ```html 这是我们收集数据以建立我们的RAG的方法。每个文件与一个页面相关。 ``` This HTML text will display the translated Chinese text while preserving the structure of an HTML document.

进一步优化

Sure, here's the translation of the text into simplified Chinese, keeping the HTML structure: ```html

我们都听过著名的谚语“垃圾进, 垃圾出”。风险评估图表(RAGs)也不例外。有很多方法可以提升这些数据点的质量。以下是一些建议:

``` This HTML snippet translates the English text while preserving the structure for web content.- 在保持 HTML 结构不变的情况下,将以下英文文本翻译成简体中文: 不要捕获页面中的所有文本。只提取你感兴趣的部分。例如,你可以在解析方法中添加以下逻辑,这对于 ATO 非常有效。

page_text = response.xpath(

"//p[not(ancestor::header) and not(ancestor::*[contains(@id, 'nav')])]//text()").getall()

page_text = [text.strip() for text in page_text if

not ("Traditional Owners and Custodians" in text or "RSS news feeds" in text)]

page_text = '\n'.join(page_text).strip()

page_text = remove_tags(page_text)Here's the translation while keeping the HTML structure: ```html 这种逻辑高度依赖于被抓取的网站及其HTML结构。 ```

To translate the given English text "Use scrapy-splash to render javascript for pages containing js. Scrapy by default does not support that." into simplified Chinese while keeping the HTML structure, you can present it like this: ```html

使用 scrapy-splash 渲染包含 JavaScript 的页面。Scrapy 默认情况下不支持这一功能。

``` This HTML structure maintains the original sentence structure while providing the translated text in simplified Chinese.Sure, here's the translated text in simplified Chinese, keeping the HTML structure intact: ```html 3. 使用 Selenium 模拟更复杂的行为。例如,爬取澳大利亚税务局法律数据库需要在 DOM 内点击和展开许多项目。以下是执行此操作并将所有单独页面链接输出到文件的代码片段。 ``` Please note that this translation assumes "ATO's Legal Database" refers to the Australian Taxation Office's legal database. If "ATO" refers to something else in context, the translation might need adjustment accordingly.

import org.openqa.selenium.By; // Importing Selenium's By class for locating elements

import org.openqa.selenium.JavascriptExecutor; // Importing Selenium's JavascriptExecutor for executing JavaScript

import org.openqa.selenium.Keys; // Importing Selenium's Keys class for keyboard actions

import org.openqa.selenium.WebDriver; // Importing Selenium's WebDriver interface for web browser control

import org.openqa.selenium.WebElement; // Importing Selenium's WebElement interface for interacting with elements

import org.openqa.selenium.chrome.ChromeDriver; // Importing Selenium's ChromeDriver for Chrome browser automation

import org.openqa.selenium.chrome.ChromeOptions; // Importing Selenium's ChromeOptions for Chrome-specific options

import org.openqa.selenium.interactions.Actions; // Importing Selenium's Actions class for advanced interactions

import java.io.BufferedWriter; // Importing Java's BufferedWriter for writing to files

import java.io.FileWriter; // Importing Java's FileWriter for writing to files

import java.io.IOException; // Importing Java's IOException for handling IO exceptions

import java.util.*; // Importing Java's utility classes

public class WebScraper {

// URL of the website to be scraped

private static final String START_URL = "https://www.ato.gov.au/single-page-applications/legaldatabase#Law/table-of-contents";

private static WebDriver driver; // WebDriver instance for controlling the browser

private static Set<String> urls = new HashSet<>(); // Set to store captured URLs

private static Set<String> visitedXpaths = new HashSet<>(); // Set to store visited XPath expressions

private static JavascriptExecutor js; // JavascriptExecutor instance for executing JavaScript

private static final String OUTPUT_FILE = "captured_urls.txt"; // Output file for captured URLs

static Random rand = new Random(); // Random instance for generating random numbers

public static void main(String[] args) throws InterruptedException {

// Setting the path to the ChromeDriver executable

System.setProperty("webdriver.chrome.driver", "path to the chrome driver file");

ChromeOptions options = new ChromeOptions();

options.addArguments("--start-maximized"); // Start the browser maximized

driver = new ChromeDriver(options); // Initialize the ChromeDriver

js = (JavascriptExecutor) driver; // Initialize the JavascriptExecutor

try (BufferedWriter writer = new BufferedWriter(new FileWriter(OUTPUT_FILE, true))) {

driver.get(START_URL); // Open the start URL

Thread.sleep(10000); // Wait for potential redirects or DOM updates

System.out.println("Starting to expand and capture URLs...");

expandAndCaptureUrls(writer); // Start expanding and capturing URLs

} catch (IOException e) {

e.printStackTrace(); // Print any IO exceptions

} finally {

driver.quit(); // Quit the WebDriver

}

// Print all captured URLs

System.out.println("Captured URLs:");

for (String url : urls) {

System.out.println(url);

}

}

private static void expandAndCaptureUrls(BufferedWriter writer) throws IOException {

while (true) {

// Find all elements with the class "fancytree-title"

List<WebElement> elements = new ArrayList<>(driver.findElements(By.className("fancytree-title")));

boolean atLeastOneNew = false; // Flag to check if at least one new element is found

for (WebElement element : elements) {

String xpath = getXPath(element); // Get the XPath of the element

if (!visitedXpaths.contains(xpath)) {

atLeastOneNew = true;

visitedXpaths.add(xpath); // Add the XPath to the set of visited XPaths

String titlePath = getTitlePath(element); // Get the title path of the element

System.out.println("Clicking element with XPath: " + xpath);

System.out.println("Title Path: " + titlePath);

try {

// Hold Command (or Control) key and click to open in a new tab

Actions actions = new Actions(driver);

String os = System.getProperty("os.name").toLowerCase();

if (os.contains("mac")) {

actions.keyDown(Keys.COMMAND).click(element).keyUp(Keys.COMMAND).build().perform();

} else {

actions.keyDown(Keys.CONTROL).click(element).keyUp(Keys.CONTROL).build().perform();

}

int randomNum = rand.nextInt(3) + 1;

Thread.sleep(randomNum * 1000); // Wait for the new tab to open

// Capture the current window handles

ArrayList<String> tabs = new ArrayList<>(driver.getWindowHandles());

if (tabs.size() > 1) {

// Switch to the new tab and capture the URL

driver.switchTo().window(tabs.get(1)); // Switch to the new tab

String currentUrl = driver.getCurrentUrl();

System.out.println("Captured URL: " + currentUrl);

System.out.println("Title Path: " + titlePath);

urls.add(currentUrl); // Add the captured URL to the set of URLs

// Write the URL and title path to the file

writer.write(currentUrl + " | " + titlePath);

writer.newLine();

writer.flush();

// Close the new tab and switch back to the original tab

driver.close();

driver.switchTo().window(tabs.get(0)); // Switch back to the original tab

}

} catch (Exception e) {

e.printStackTrace(); // Print any exceptions

}

}

}

if (!atLeastOneNew) {

break; // Exit the loop if no new elements are found

}

}

}

private static String getTitlePath(WebElement element) {

StringBuilder titlePath = new StringBuilder();

WebElement currentElement = element;

int tolerance = 7; // Tolerance for missing titles

while (currentElement != null && tolerance > 0) {

String title = currentElement.getAttribute("title");

if (title != null && !title.isEmpty()) {

if (!titlePath.isEmpty()) {

titlePath.insert(0, " - ");

}

titlePath.insert(0, title);

} else tolerance--;

currentElement = currentElement.findElement(By.xpath("..")); // Move to the parent element

}

return titlePath.toString();

}

private static String getXPath(WebElement element) {

return (String) js.executeScript(

"function getElementXPath(element) {" +

" if (element.id !== '') {" +

" return 'id(\"' + element.id + '\")';" +

" }" +

" if (element === document.body) {" +

" return element.tagName;" +

" }" +

" var ix = 0;" +

" var siblings = element.parentNode.childNodes;" +

" for (var i = 0; i < siblings.length; i++) {" +

" var sibling = siblings[i];" +

" if (sibling === element) {" +

" return getElementXPath(element.parentNode) + '/' + element.tagName + '[' + (ix + 1) + ']';" +

" }" +

" if (sibling.nodeType === 1 && sibling.tagName === element.tagName) {" +

" ix++;" +

" }" +

" }" +

" return '';" +

"}" +

"return getElementXPath(arguments[0]);",

element);

}

}Sure, here is the translated text in simplified Chinese: 你可以使用 Selenium 或 Scrapy 逐个浏览链接并捕获它们的内容。请注意,本地运行上述代码花了我几天时间来生成大量链接,这些链接可以用于我的 RAG。

Sure, the translation of "What to Do Next?" into simplified Chinese while keeping the HTML structure intact would be: ```html

接下来该做什么?

``` In this HTML snippet: - `` denotes a paragraph tag in HTML. - `接下来该做什么?` is the simplified Chinese translation of "What to Do Next?"

Sure, here's the simplified Chinese translation of your text, keeping the HTML structure: ```html 在第二部分,我们将利用刚刚抓取的数据构建一个本地聊天机器人。 ``` This HTML code embeds the Chinese text in a format that ensures it displays correctly within an HTML document.

To translate "Code" into simplified Chinese while keeping the HTML structure intact, you would use the following: ```html

代码``` In this HTML snippet: - `` indicates that the enclosed text should be treated as computer code. - `代码` is the simplified Chinese translation of "Code". So, `代码` would display as "代码" on a web page, indicating that it represents code or programming instructions.To translate the English text on the GitHub page you mentioned to simplified Chinese while keeping the HTML structure intact, you can use the following code snippet: ```html

Tax-GPT GitHub 页面翻译 欢迎访问 Tax-GPT GitHub 页面

您可以在 这里 查看原始页面。

以下是页面的简体中文翻译:

简体中文翻译内容……

``` Replace `"简体中文翻译内容……"` with the actual translated content of the GitHub page. Ensure that all Chinese characters are correctly encoded in UTF-8 to display properly in the browser. This structure maintains the basic HTML layout while incorporating the translated content into the body of the page. - Sure, here's the translation of the text into simplified Chinese, while maintaining the HTML structure: ```html